Linux Rice V2.5: ags + AI

Introduction

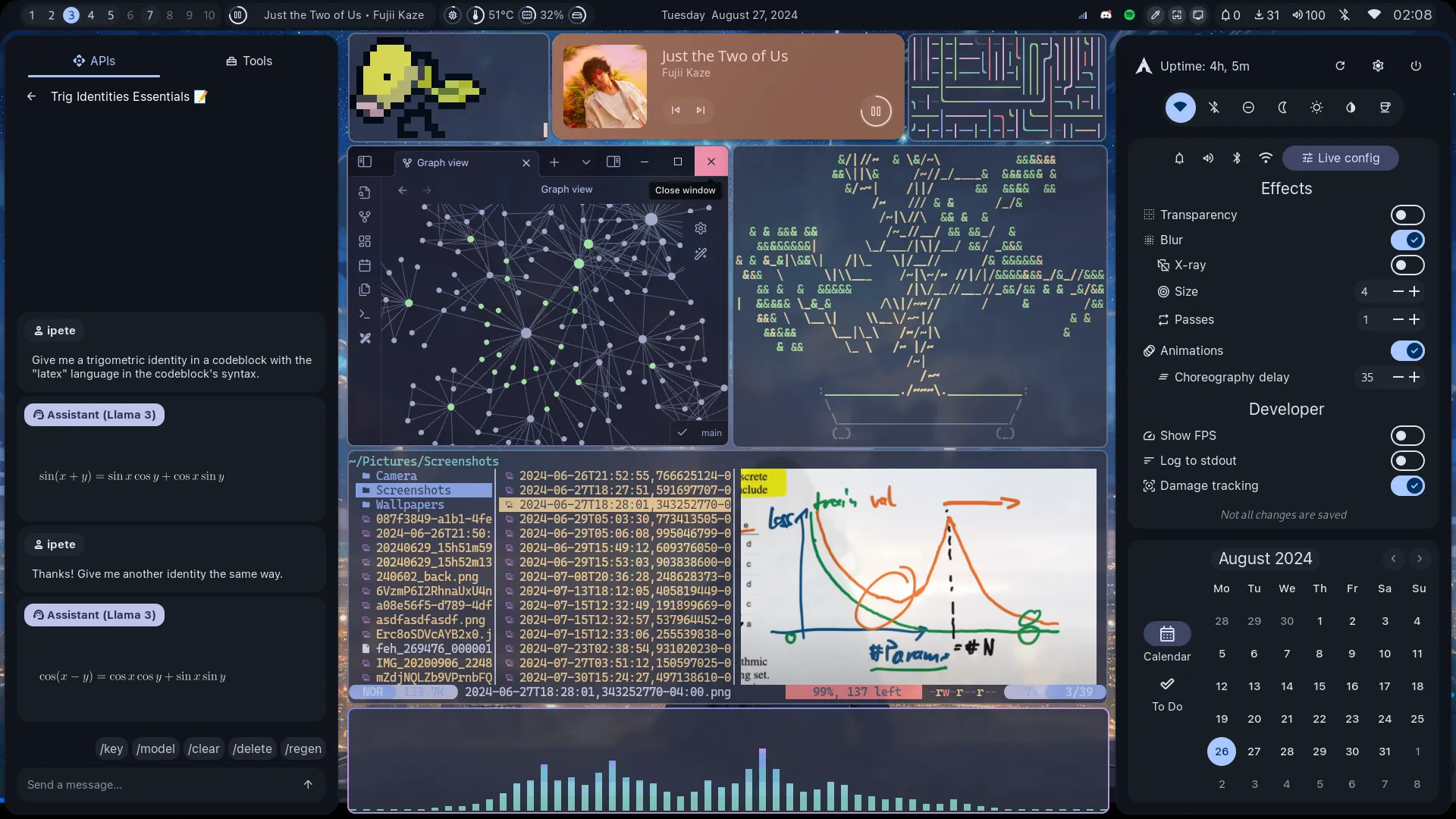

This rice is a continuation of my Wayland + Hyprland rice from 2023. All my Hyprland and application-based configurations remain the same. The biggest change was replacing EWW with ags and adding some AI applications. In addition to an LLM assistant, I also have scripts for live translation in my terminal using OpenAI’s Whisper, and for image generation with AUTOMATIC1111’s Stable Diffusion web UI.

Aylur’s GTK Shell (ags)

Aylur’s GTK Shell is a library inspired by EWW for building GTK widgets with GNOME JavaScript. Ags offers a much more comfortable development experience than EWW thanks to its familiar language and better debugging features. I also found ags to be more responsive and more memory-efficient. My only gripes with ags are the same issues that plague every JavaScript/TypeScript developer. Nonetheless, its integration with Hyprland and Wayland has made it a treat for building large-scale widgets. I’ve based my configuration on the underlying services from end-4’s configuration.

Bar

The bar is essentially a recreation of the EWW bar from my previous rice. Only the styling and a few interactions have changed. From left to right, the bar’s features are:

- Workspaces

- Music Player

- Date & Weather

- System tray

- Useful buttons

- Color picker

- Screenshot tool

- Wallpaper changer

- Notifications

- Updates

- Volume

- Bluetooth connection

- Internet connection

- Time

Panels

The left panel from last year’s EWW config was ported to ags and overhauled. It now holds the newly added AI assistant and quick access to some miscellaneous but useful scripts. A right panel was added, which contains quick system toggles, notifications, a volume mixer, Bluetooth and internet lists, appearance settings, a calendar, and a TODO list.

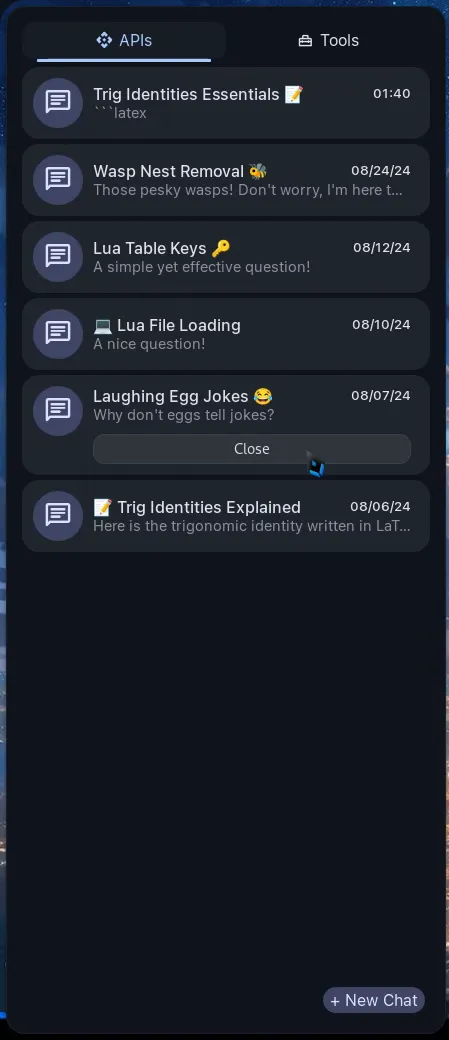

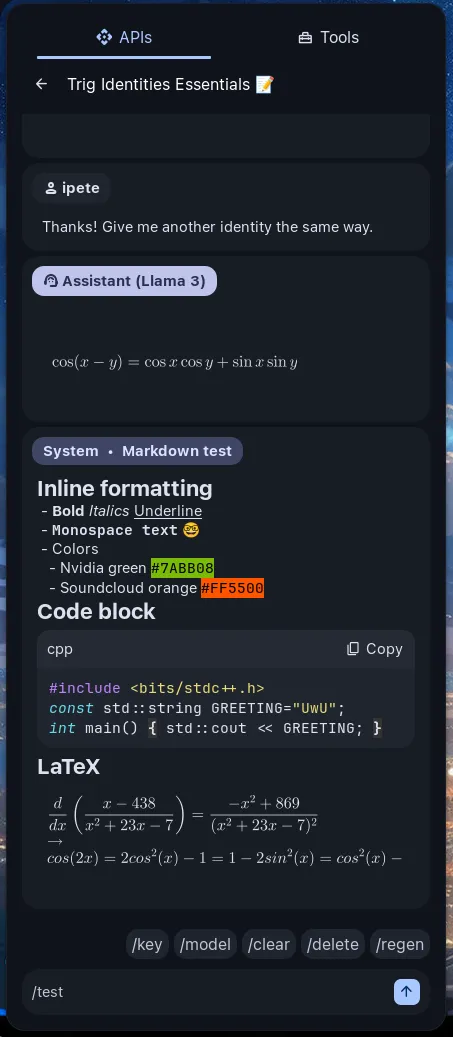

Local AI Assistant

The left panel contains a chat frontend for interacting with Ollama. Ollama acts as a user-friendly interface to llama.cpp for running LLM inference. This allows me to run my own LLMs locally without depending on third-party APIs. With my hardware I can comfortably run 7-8b parameter models alongside my normal GPU use. Multiple chat conversations can be created and deleted. Chats are encrypted and stored in a SQLite database. Chat titles are automatically generated with a templated prompt. The model’s settings, such as temperature, can be adjusted in a settings panel. Currently, model selection is handled in a user configuration file. The main models I jump between are llama3 8b, llama3.1 8b, codellama 7b, and dolphin-mistral 7b. I have done some work on finetuning a more personalized model on my own data using QLoRA, but shelved it due to mixed results and my own ethical considerations.
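As a rough illustration of the request side, here is a minimal Python sketch (the ags widgets themselves are written in JavaScript). It assumes Ollama is listening on its default local address and uses its `/api/chat` endpoint. The function names, the title template wording, and the temperature values are hypothetical and not taken from the actual configuration.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address

# Hypothetical template; the real title prompt is not shown in this post.
TITLE_TEMPLATE = (
    "Summarize the following message as a chat title of at most five words:\n\n"
    "{message}"
)


def build_chat_payload(model, messages, temperature=0.7):
    """Return the request body for a non-streaming /api/chat call."""
    return {
        "model": model,
        "messages": messages,
        "stream": False,
        "options": {"temperature": temperature},
    }


def build_title_payload(model, first_message):
    """Build a request asking the model to title a chat from its first message."""
    prompt = TITLE_TEMPLATE.format(message=first_message)
    # A low temperature is an assumption here, chosen to keep titles stable.
    return build_chat_payload(
        model, [{"role": "user", "content": prompt}], temperature=0.2
    )


def send(payload, url=OLLAMA_URL):
    """POST the payload to a running Ollama server and return the reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["message"]["content"]
```

With a server running and a model pulled, `send(build_chat_payload("llama3:8b", [{"role": "user", "content": "hello"}]))` would return the assistant's reply as a string. Exposing temperature as a plain argument mirrors how a settings panel could feed values into each request.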

Chat conversations are rendered in Markdown. This includes typography, syntax highlighting, code blocks, and LaTeX blocks. Code blocks also render with a copy button to quickly copy their contents to the system clipboard. The chat also features the same tools many LLM web services provide, such as deleting the last messages sent and regenerating a message. In the backend, some prompts are prepended to each conversation to help the model be more useful as a desktop assistant.
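The delete and regenerate tools and the prepended prompts described above can be sketched as operations on a list of messages. This is a minimal Python sketch under assumptions: the system prompt text, the function names, and the `generate` callback (standing in for the call to the model) are all hypothetical.

```python
# Hypothetical system prompt; the real prepended prompts are not shown here.
SYSTEM_PROMPT = "You are a helpful assistant running on a Linux desktop."


def with_system_prompt(history):
    """Return the messages to send: the system prompt, then the chat history."""
    return [{"role": "system", "content": SYSTEM_PROMPT}] + list(history)


def delete_last_exchange(history):
    """Drop the trailing assistant reply (if any) and the user message before it."""
    trimmed = list(history)
    if trimmed and trimmed[-1]["role"] == "assistant":
        trimmed.pop()
    if trimmed and trimmed[-1]["role"] == "user":
        trimmed.pop()
    return trimmed


def regenerate(history, generate):
    """Replace the last assistant reply with a fresh one produced by `generate`."""
    trimmed = list(history)
    if trimmed and trimmed[-1]["role"] == "assistant":
        trimmed.pop()
    reply = generate(with_system_prompt(trimmed))
    return trimmed + [{"role": "assistant", "content": reply}]
```

Keeping the system prompt out of the stored history and adding it only at send time means the saved chat contains just what is displayed, and the prompt can be changed later without rewriting old conversations.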

| Chat List | Chat with Markdown Test Example |
|---|---|
|  |  |

Workflow

Development

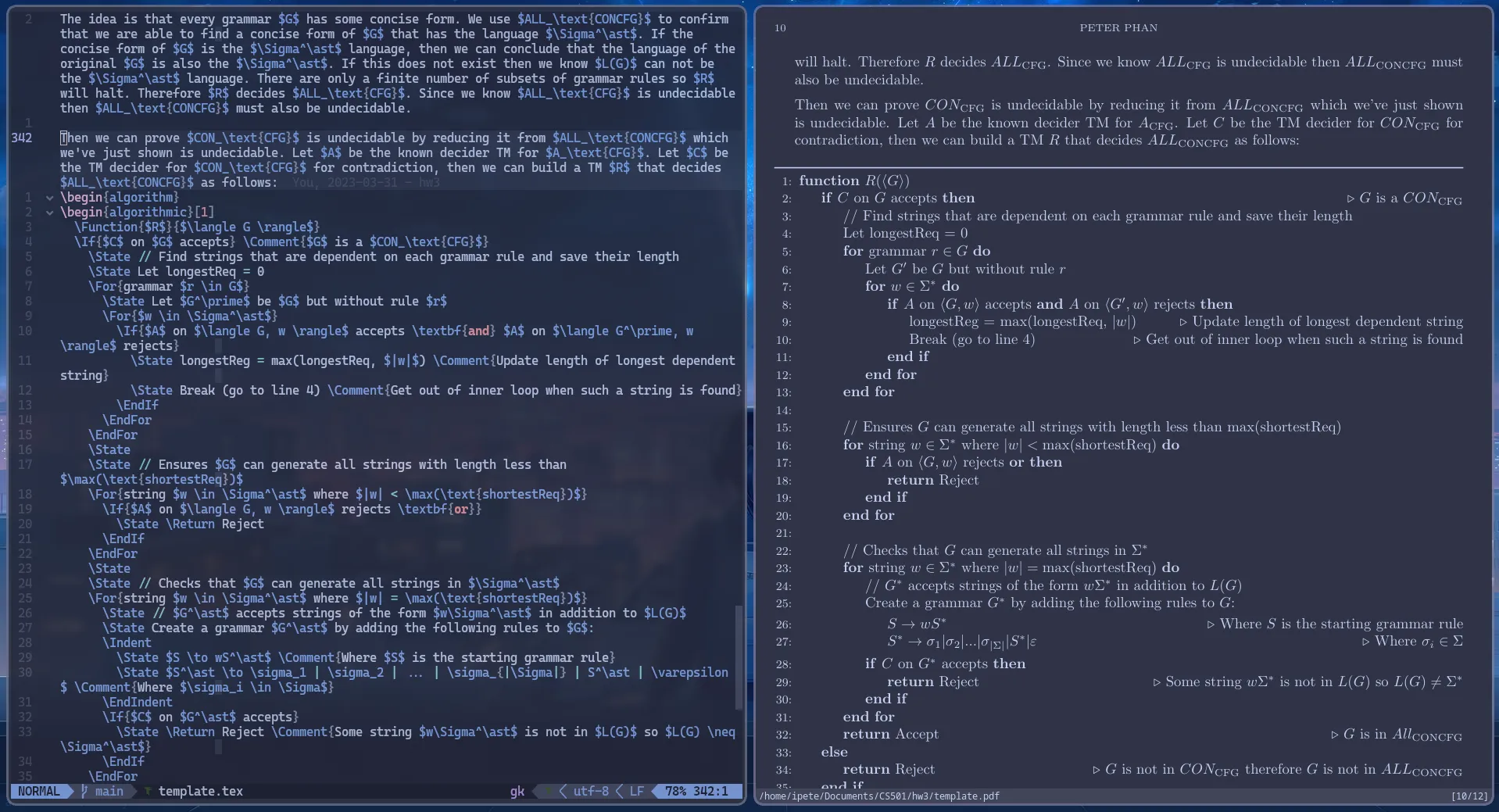

My primary editor is Neovim with my own configuration. My secondary editor is VS Code. When I need a full-fledged IDE, I use IntelliJ IDEA CE. I use QEMU and the libvirt GUI to create and manage virtual machines.

Writing

My LaTeX writing setup consists of the Vim plugin vimtex and the PDF viewer Zathura.

Notetaking

I use a combination of Neovim, Obsidian.md, Zotero, and Krita for note-taking. I use these applications mostly in their stock configuration. The notable plugins I use for Obsidian are Vim and Excalidraw.