Emoji Doodler

Facial Emoji Recognition Bot

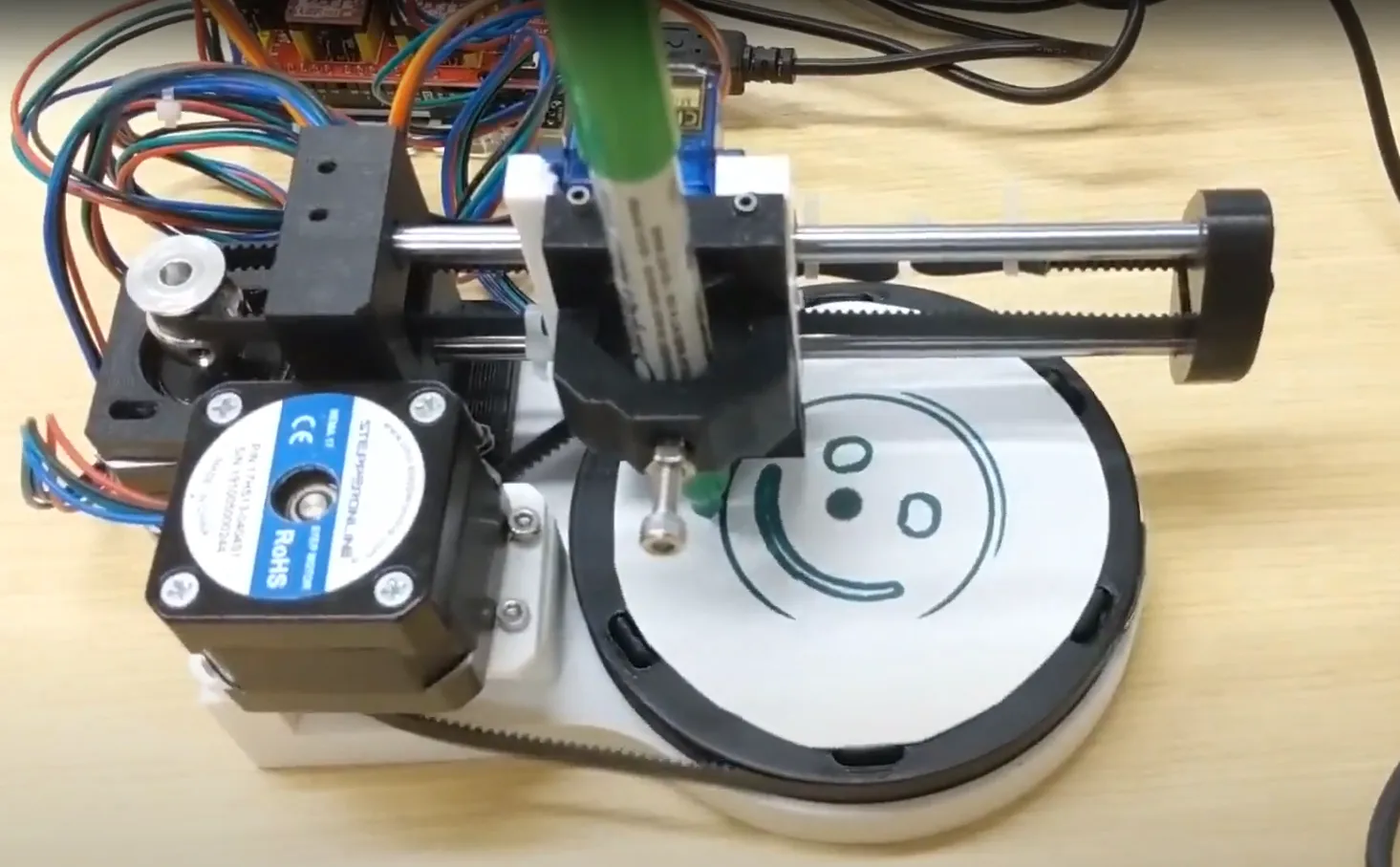

The FER-BOT is a polar-based drawing machine. The robot aims to draw the emoticon that best represents a given human facial expression. It uses a trained machine learning model to classify facial expressions into emotions. Once the robot identifies a subject’s expression, it attempts to draw the corresponding emoji. The machine draws with a linear arm mounted over a rotating base. This mechanism was chosen because it gives the robot a smaller footprint that is well suited to drawing emoticons. The drawing is driven by an Arduino that executes G-code instructions.

Hardware

The drawing machine’s movement is powered by two NEMA 17 stepper motors and one servo. The controller for these motors is an Arduino Uno with a CNC shield. The stepper motors are powered by a 12V power supply through the CNC shield and the stepper drivers. The servo motor is powered from a 5V pin on the Arduino Uno. The two stepper motors drive the radius ($r$) and angle ($\theta$) axes with the help of timing belts. The servo motor acts as the z-axis, raising and lowering the pen.

The machine’s body consists of 3D printed parts and various bearings. The turntable has bearings on its center axis as well as smaller bearings underneath it to give a smoother glide. On the arm there is one set of bearings for the horizontal movement and another set for the vertical movement. Everything is held together by screws, zip ties, friction, and tissues.

Software

The face detection and facial expression recognition are handled by a Python script that uses the laptop’s webcam. The script can use any trained CNN model to recognize seven classes of expression: Angry, Disgust, Fear, Happy, Sad, Surprise, Neutral. Once an emotion is confidently classified, it is sent to the Arduino, which draws the corresponding emoji.
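The "confidently classified" check can be sketched as a simple threshold on the model's output probabilities. This is a minimal illustration, not the project's actual script: the function name `confident_label`, the 0.6 threshold, and the assumption that the model returns one probability per class in the order listed above are all hypothetical.

```python
# Class order is assumed to match the list above; the real model may differ.
LABELS = ["Angry", "Disgust", "Fear", "Happy", "Sad", "Surprise", "Neutral"]


def confident_label(probabilities, threshold=0.6):
    """Return the top label if its probability reaches the threshold, else None.

    `threshold` is a hypothetical value; it would need tuning on real data.
    """
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per label")
    # Index of the most probable class.
    best = max(range(len(LABELS)), key=lambda i: probabilities[i])
    return LABELS[best] if probabilities[best] >= threshold else None
```

A caller would only forward the emotion to the Arduino when the function returns a label, and keep sampling frames when it returns `None`.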

The firmware that controls the machine’s movement is a custom fork of GRBL. This fork repurposes the spindle pin to control a servo motor for the z-axis. In GRBL, the $r$ axis is represented by the x-axis and the $\theta$ axis by the y-axis. The GRBL settings were calibrated for the drawing area. There is also preprocessing code that converts regular Cartesian G-code into polar coordinates.

The immediate obstacle for this implementation was the conversion of Cartesian instructions into polar coordinates. This was overcome with the help of a script written by Barton Dring, which was used to convert the drawing instructions for the necessary emojis.
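To illustrate what such a conversion involves, here is a minimal sketch in Python. It is not Barton Dring's script; the function names, the 1 mm segment length, and the convention that coordinates are measured from the turntable center are assumptions made for the example. Each Cartesian point $(x, y)$ maps to $r = \sqrt{x^2 + y^2}$ and $\theta = \operatorname{atan2}(y, x)$, and the result is emitted with $r$ on X and $\theta$ (in degrees) on Y. Because a straight Cartesian line is not straight in polar space, the sketch splits each move into short segments.

```python
import math


def to_polar(x, y, prev_theta=0.0):
    """Return (r, theta_deg) for a point measured from the turntable center.

    theta is unwrapped so it stays within 180 degrees of prev_theta, which
    avoids a full reverse turn when a path crosses the +/-180 degree boundary.
    """
    r = math.hypot(x, y)
    if r == 0.0:
        return 0.0, prev_theta  # angle is undefined at the center; keep the old one
    theta = math.degrees(math.atan2(y, x))
    # Shift by whole turns so theta lands nearest to prev_theta.
    theta += 360.0 * round((prev_theta - theta) / 360.0)
    return r, theta


def line_to_polar_gcode(start, end, prev_theta=0.0, max_segment=1.0):
    """Split a straight Cartesian move into short pieces and emit polar G1 lines.

    Returns the G-code lines and the final theta, so the next move can
    continue unwrapping from where this one ended.
    """
    (x0, y0), (x1, y1) = start, end
    length = math.hypot(x1 - x0, y1 - y0)
    steps = max(1, math.ceil(length / max_segment))
    lines = []
    theta = prev_theta
    for i in range(1, steps + 1):
        t = i / steps
        r, theta = to_polar(x0 + t * (x1 - x0), y0 + t * (y1 - y0), theta)
        lines.append(f"G1 X{r:.3f} Y{theta:.3f}")  # X carries r, Y carries theta
    return lines, theta
```

For example, `line_to_polar_gcode((10, 0), (0, 10), max_segment=5)` yields three `G1` moves that sweep the turntable from 0 to 90 degrees while the arm moves in and back out to follow the straight chord.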

Facial Recognition Model

Three separate models of different designs were trained as part of a case study on CNN architectures. These models include the classic AlexNet and ResNet, as well as the state-of-the-art Vision Transformer (ViT). For the case study, we trained the models on the Facial Expression Recognition (FER-2013) dataset by Ian Goodfellow. This dataset includes data for 7 labels of facial expressions. The goal was to compare and contrast the training results of these models (details).

The final test accuracies of the ResNet and ViT models were comparable at ~60%, so the ResNet model was chosen for the drawing robot’s AI. Note that human accuracy on the same FER-2013 dataset is $65 \pm 5\%$ (arXiv:1307.0414). Below is a more detailed breakdown of the test accuracy on the different expressions.

Once the model was trained, it was inserted into a Python script that feeds it snapshots from a webcam using the OpenCV-Python library. The script first detects a face within a frame, then crops the face and sends the crop to the model. Once the model outputs its prediction, the prediction is displayed in the console along with a “confidence graph”. This entire process is repeated every few seconds, as shown in the demo.
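A console "confidence graph" of this kind could be rendered as one text bar per expression. The sketch below is only an assumption about what such output might look like; the function name `confidence_graph`, the `#` bar character, and the bar width are hypothetical and not taken from the project's script.

```python
def confidence_graph(labels, probabilities, width=20):
    """Build a text bar chart with one row per label.

    Each bar is scaled so that a probability of 1.0 fills `width` characters.
    """
    pad = max(len(label) for label in labels)  # align bars in one column
    rows = []
    for label, p in zip(labels, probabilities):
        bar = "#" * round(p * width)
        rows.append(f"{label:<{pad}} {bar} {p:.0%}")
    return "\n".join(rows)
```

Calling `print(confidence_graph(labels, probabilities))` after each prediction would show at a glance how strongly the model favors its top class over the others.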